Our project compares the computational efficiency of Geoffrey Hinton’s novel Forward-Forward (FF) algorithm with that of traditional backpropagation (BP) in neural network training. We aimed to quantify potential improvements in training speed and resource utilisation, which is particularly relevant given current concerns about AI energy consumption.

We implemented both the FF and BP algorithms in PyTorch and trained them on the MNIST dataset. The FF algorithm replaces the forward and backward passes of BP with two forward passes, one on positive (i.e. real) data and one on negative (i.e. fake) data. Each hidden layer is trained with its own local loss function, which eliminates the need to propagate error gradients backwards through the network.
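The layer-local training described above can be illustrated with a minimal sketch. It is not the code used in this project: the class name `FFLayer`, the `threshold` and `lr` values, and the choice of mean squared activation as the "goodness" measure are assumptions, loosely following Hinton's description of the algorithm. Autograd is still called, but only inside a single layer, so no gradient crosses layer boundaries.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class FFLayer(nn.Module):
    """One hidden layer trained with its own local loss (hypothetical sketch)."""

    def __init__(self, in_features, out_features, threshold=2.0, lr=0.03):
        super().__init__()
        self.linear = nn.Linear(in_features, out_features)
        self.threshold = threshold  # assumed value, not taken from the project
        self.opt = torch.optim.Adam(self.linear.parameters(), lr=lr)

    def forward(self, x):
        # Normalise each input vector so only its direction is passed on,
        # not the goodness already computed by the previous layer.
        x = x / (x.norm(dim=1, keepdim=True) + 1e-8)
        return F.relu(self.linear(x))

    def train_step(self, x_pos, x_neg):
        # Goodness: mean squared activation per sample (an assumed choice).
        g_pos = self.forward(x_pos).pow(2).mean(dim=1)
        g_neg = self.forward(x_neg).pow(2).mean(dim=1)
        # Push positive goodness above the threshold and negative below it.
        loss = F.softplus(
            torch.cat([self.threshold - g_pos, g_neg - self.threshold])
        ).mean()
        self.opt.zero_grad()
        loss.backward()  # gradients exist only for this layer's parameters
        self.opt.step()
        # Return activations without a graph so the next layer trains locally.
        with torch.no_grad():
            return self.forward(x_pos), self.forward(x_neg), loss.item()


torch.manual_seed(0)
layer = FFLayer(784, 64)
x_pos = torch.rand(8, 784)  # stand-in for real samples
x_neg = torch.rand(8, 784)  # stand-in for fake samples
h_pos, h_neg, loss = layer.train_step(x_pos, x_neg)
```

In a deeper network, `h_pos` and `h_neg` would be passed to the next `FFLayer`, which repeats the same local update. How the negative samples are constructed is left out of this sketch.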

We conducted our experiments on an NVIDIA GTX 1080 GPU, training both models until they reached 97% accuracy so that the comparison was fair. Using the gpustats library, we measured training time, GPU memory usage, GPU utilisation, and power consumption. Both implementations were adapted from open-source repositories and used the same network architecture.
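The summary does not state how the power readings were aggregated over a training run. One plausible approach, assumed here purely for illustration, is to sample the power draw at known times and integrate it with the trapezoidal rule to obtain energy in joules. The function names `energy_joules` and `relative_saving` are hypothetical, and the numbers in the example are made up rather than measured.

```python
def energy_joules(timestamps_s, power_w):
    """Integrate sampled power (watts) over time (seconds) with the trapezoidal rule."""
    if len(timestamps_s) != len(power_w):
        raise ValueError("timestamps and power samples must have the same length")
    total = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


def relative_saving(baseline, candidate):
    """Fraction by which `candidate` is lower than `baseline`."""
    return (baseline - candidate) / baseline


# Illustrative values only, not measurements from this project.
constant_run = energy_joules([0, 1, 2], [100, 100, 100])  # 100 W held for 2 s
ramp_run = energy_joules([0, 2], [50, 150])               # linear ramp over 2 s
saving = relative_saving(250.0, 200.0)
```

Because energy depends on both power draw and run time, a model that finishes sooner can use less energy even when its instantaneous power draw is similar.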

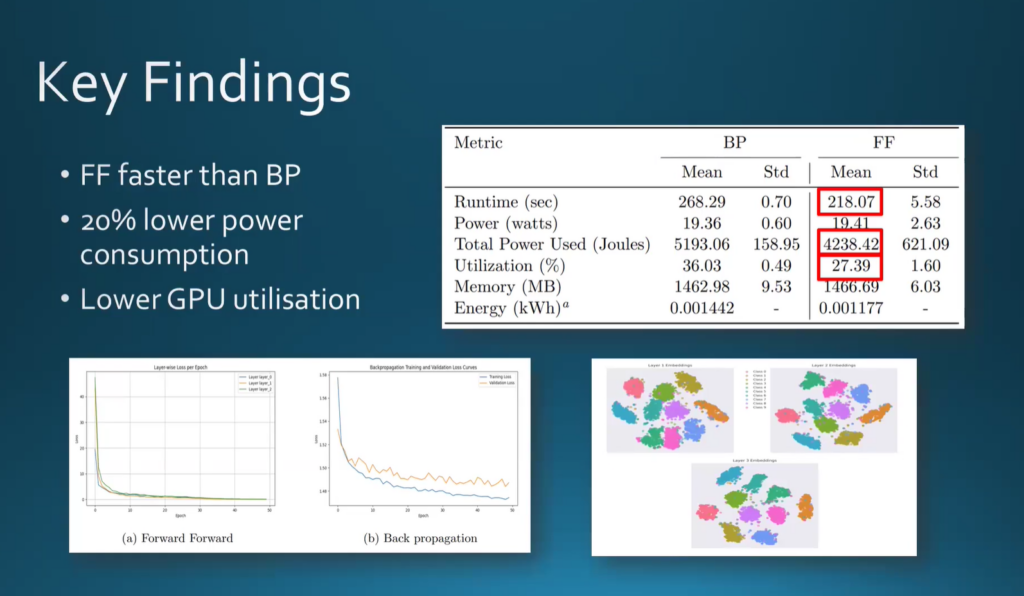

The FF algorithm reached 97% accuracy 50 seconds faster than BP and consumed 20% less total power. GPU utilisation was also lower (27.39% vs 36.03%), while memory usage remained similar. The main limitations of this project were hardware constraints and implementation complexity, which prevented us from testing on additional datasets such as CIFAR-10.

This research provided insights into alternative neural network training methods and their practical implications for resource-constrained applications. The project also deepened our understanding of machine learning implementations and their challenges, and it contributed data on the Forward-Forward algorithm’s potential benefits for computational efficiency.